The Model Context Protocol (MCP) is an open standard that lets AI applications connect to external data and tools through one shared interface. This article explores why MCP matters, how it works, and where it’s leading the AI industry.

Introducing Torii’s MCP Server – Now on npm!

Unlock direct integration with your application right from Claude or Cursor using our brand-new MCP Server. Get started in seconds—simply install our package from npm and experience seamless connectivity.

👉 Install Now:

npm install @toriihq/torii-mcp

Visit our npm page for more details and documentation.

The Brick Wall for AI

AI is both amazing and infuriating. You can vibe code a pretty website in minutes, but your phone’s AI assistant still can’t add a task to Todoist.

The fundamental problem for AI is connection.

The first phase of AI tools operated in isolation. They could offer limited capabilities based on training data (e.g., writing a haiku about 90s hip-hop) but could not handle simple tasks that span systems, such as answering an email or even searching the web.

The next phase of AI brought some connection. For example, the OpenAI plugin marketplace gave users a glimpse into our agentic future with interesting-but-limited tools. Google’s AI companion was able to assist with knowledge work tasks as long as they stayed within Google’s ecosystem. Since the beginning of this second phase, the number of tools has increased dramatically, and so has the number of things an AI tool can do. But there is a problem.

Why Not Just Build More Tools?

The “brick wall” of AI doing useful things has been the complexity of building connections. Think of it as an equation.

MxN (M = AI Models and N = Tools)

For every new model (Claude, ChatGPT, Gemini, DeepSeek, etc.) and every unique tool (plugins, third-party tools, etc.), you multiply the number of integrations needed to connect them all. This is the primary barrier preventing AI from fitting seamlessly into our daily lives and processes.

No one told me that working in devtools/infrastructure would mean converting MxN into M+N over and over again until the heat death of the universe

— swyx (@swyx) February 25, 2023

But with MCP, that equation changes.

MCP Breaks the Wall

Open standards matter.

- USB-C

- HTML

- LSP

Unless you’re Tim Cook selling power cables in Germany, you recognize that open standards are good. They allow different vendors to contribute to ecosystems for the user.

MCP is an open standard for connecting AI models to service providers and data providers. This allows users to pull in more information and push out more execution, all from their AI tool. Notably, despite being created and announced by the team at Anthropic (makers of Claude), the MCP standard was also adopted by OpenAI.

people love MCP and we are excited to add support across our products.

available today in the agents SDK and support for chatgpt desktop app + responses api coming soon!

— Sam Altman (@sama) March 26, 2025

Returning to our previous equation, the broad adoption of MCP essentially means that the MxN problem becomes an M+N opportunity. Instead of new models and tools multiplying complexity, they will add opportunity.
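To make the arithmetic concrete, here is a minimal sketch that counts integrations both ways. The function names and the sample numbers (4 models, 25 tools) are illustrative assumptions, not figures from any survey.

```python
def point_to_point_integrations(models: int, tools: int) -> int:
    """Every model needs its own custom connector for every tool: M x N."""
    return models * tools


def shared_protocol_integrations(models: int, tools: int) -> int:
    """Each model and each tool implements the shared protocol once: M + N."""
    return models + tools


if __name__ == "__main__":
    # Illustrative numbers only: 4 models and 25 tools.
    m, n = 4, 25
    print(point_to_point_integrations(m, n))   # 100 custom connectors
    print(shared_protocol_integrations(m, n))  # 29 protocol implementations
```

Adding a fifth model costs 25 more connectors in the first case and only one more implementation in the second.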

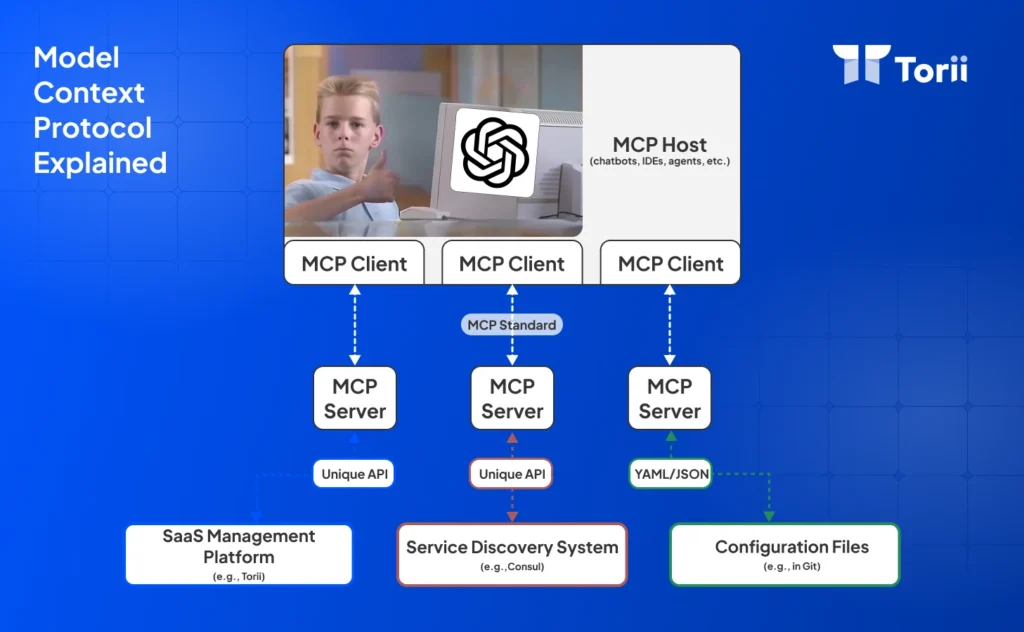

Going Deeper: What is MCP?

MCP is an open standard, but how does it work in the real world? The overall process can be broken down into three key roles: Hosts, Clients, and Servers. Understanding each role is essential for learning how an AI application can tap into external tools and data via MCP.

Three Components of MCP

- Hosts: These are the applications people interact with directly, such as chatbots, IDEs, and custom AI agents. From the user’s perspective, a host is simply the interface where they ask questions and get answers. Behind the scenes, the Host can load multiple MCP clients to connect to different services, but this complexity is hidden.

- Clients: The clients act as a middle layer inside of the Host application. Each client manages communication with exactly one MCP server. If your Host needs to talk to multiple servers—one for GitHub, one for Slack, one for Torii—it will spin up three separate Clients. This one-to-one pairing ensures clean connections and simplifies the flow of data.

- Servers: Servers are the external programs that expose capabilities to the AI. The server “wraps” the system or service provider (like GitHub, Slack, or Torii) and translates its functionality into a standardized MCP format. It acts as the intermediary between the AI and the underlying system, offering a consistent structure so the Host knows exactly how to discover the available tools, read data, or use the prompts the Server provides, regardless of the service that sits behind it.

Note: Importantly, MCP does not replace an API. Instead, an MCP server translates requests so that a Host can access the system without needing to know the exact structure of the underlying API.
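The three roles can be modeled in a few lines. This is a toy sketch of the relationships only, not the real MCP SDK or wire protocol: the class names (`ToyHost`, `ToyClient`, `ToyServer`), the tool names, and the fake service functions are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ToyServer:
    """Wraps one backing service and exposes its functions as named tools."""
    name: str
    tools: Dict[str, Callable[..., object]]

    def list_tools(self) -> List[str]:
        return sorted(self.tools)

    def call_tool(self, tool: str, **arguments: object) -> object:
        return self.tools[tool](**arguments)


@dataclass
class ToyClient:
    """Manages the connection to exactly one server."""
    server: ToyServer


@dataclass
class ToyHost:
    """The user-facing app; it holds one client per connected server."""
    clients: Dict[str, ToyClient] = field(default_factory=dict)

    def connect(self, server: ToyServer) -> None:
        self.clients[server.name] = ToyClient(server)

    def available_tools(self) -> List[str]:
        return [
            f"{name}.{tool}"
            for name, client in sorted(self.clients.items())
            for tool in client.server.list_tools()
        ]

    def call(self, qualified_name: str, **arguments: object) -> object:
        server_name, tool = qualified_name.split(".", 1)
        return self.clients[server_name].server.call_tool(tool, **arguments)


def build_demo_host() -> ToyHost:
    """Connect two made-up servers; the host never sees their internals."""
    def fake_open_issue_count(repo: str) -> int:
        return 3  # stand-in for a real GitHub API call

    def fake_post_message(channel: str, text: str) -> str:
        return f"posted to {channel}: {text}"  # stand-in for a Slack API call

    host = ToyHost()
    host.connect(ToyServer("github", {"count_open_issues": fake_open_issue_count}))
    host.connect(ToyServer("slack", {"post_message": fake_post_message}))
    return host


if __name__ == "__main__":
    host = build_demo_host()
    print(host.available_tools())
    print(host.call("slack.post_message", channel="#it", text="hello"))
```

The host calls every tool the same way, whatever API sits behind the server, which is the sense in which a server translates rather than replaces an API.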

Is MCP the Guaranteed Future?

Open standards efforts don’t always “stick.”

- OpenDoc

- X.400 Email Standard

- VRML

All of these standardization efforts ran into roadblocks and never built the network effects they needed to succeed.

Often, this results in the company doing the meme.

But if history is any indicator, Anthropic’s MCP has a very good chance of sticking around thanks to a series of strategic choices: publishing a detailed spec, borrowing ideas from the existing Language Server Protocol (LSP), and following capability patterns already familiar from AI agent development. These factors, combined with backing from AI powerhouses like OpenAI and Anthropic itself, have gotten the network-effect flywheel off to a strong start.

So, while nothing is guaranteed, few open standards have seen such traction so early.

MCP In Practice: A Real World Example

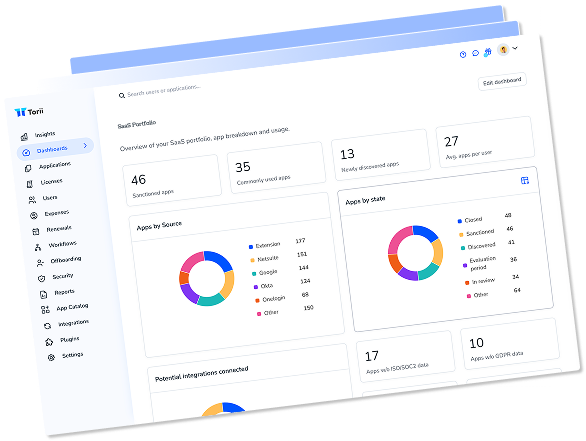

To really understand the power of MCP, let’s look at Torii—a SaaS Management Platform that continuously discovers all of your software (including shadow IT, rogue AI, and sanctioned tools) along with usage data, cost insights, contracts, and potential security gaps.

For many enterprises, Torii is effectively the “central intelligence” for every SaaS application in use.

Traditionally, if you wanted insights about your Salesforce spend, you would access Torii, locate the right application page, and find the information. But now, with MCP, this process becomes frictionless: Any authorized AI application can directly query Torii without a separate login or dashboard. This lets you engage your data where you’re already working and seamlessly incorporate it into the email you’re writing, the slide deck you’re preparing, or the Slack message you’re sending.

Why We Embraced MCP First

We recognized a growing demand from customers who want real-time data available immediately. Rather than building a complex integration with each AI tool, we rolled out a single MCP server, so your data is available when and where you need it.

Below is the code you would add to the config file for Claude to invoke Torii:

    {
      "mcpServers": {
        "torii": {
          "command": "npx",
          "args": [
            "@toriihq/torii-mcp"
          ],
          "env": {
            "TORII_API_KEY": "YOUR_API_KEY"
          }
        }
      }
    }

With that simple update, the user is able to ask questions and make requests like:

- Show me all the apps in my Torii instance

- List users who have admin access

- What contracts are expiring in the next 30 days?

- Show me contracts with renewal dates in Q4

- List all apps that were added last month
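If the config file already lists other MCP servers, the Torii entry has to be merged in rather than pasted over them. The sketch below shows one way to do that. The helper name `add_torii_server` is hypothetical, and the config file location varies by host and operating system, so the path is left as a parameter rather than assumed.

```python
import json
from pathlib import Path


def add_torii_server(config_path: Path, api_key: str) -> dict:
    """Merge the Torii entry into an MCP host config file, keeping other servers."""
    config = {}
    if config_path.exists():
        text = config_path.read_text(encoding="utf-8")
        # Treat an empty file the same as a missing one.
        config = json.loads(text) if text.strip() else {}

    # Create the "mcpServers" section if it is not there yet.
    servers = config.setdefault("mcpServers", {})
    servers["torii"] = {
        "command": "npx",
        "args": ["@toriihq/torii-mcp"],
        "env": {"TORII_API_KEY": api_key},
    }

    config_path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config


if __name__ == "__main__":
    # Hypothetical path for demonstration; point this at your host's real config file.
    updated = add_torii_server(Path("example_mcp_config.json"), "YOUR_API_KEY")
    print(json.dumps(updated, indent=2))
```

Because the API key is written to disk in plain text, it is worth checking who can read that file before running anything like this.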

This shift in approach puts a wealth of actionable data at users’ fingertips:

- Live, Real-Time Insights: Because Torii is constantly scanning your application stack and integrating financial, security, and usage data, the MCP server always reflects the latest state of your ecosystem.

- Breaking Down Silos (With People): Not everyone uses Torii, but basically everyone uses AI. With the MCP server, users who would benefit from Torii insights but never log in no longer have to. This is especially valuable for finance teams, procurement teams, and executive leadership.

- Breaking Down Silos (With Tools): Like people, tools don’t always play nice. Implementing MCP allows these tools to operate more efficiently with one another. As you build out your ecosystem of MCP-enabled apps, your AI tool can pull data and push execution without the mess of copying and pasting data.

At Torii, we recognize that as a data provider for your critical application insights, we have a fundamental responsibility to ensure your data is available immediately, securely, and effortlessly. By adopting MCP, we are one step closer to that goal.

MCP Lays the Groundwork

MCP’s adoption and growth lay exciting groundwork for the future of AI capabilities.

This open standard transforms the complexity of a sprawling MxN equation into a simple M+N solution. Once your AI application is MCP compatible, it can plug into any MCP server, instantly gaining access to tools and resources without additional custom development. Less connecting, more building.

This matters to your organization because the potential for scaling AI-driven solutions without re-engineering each new model or integration saves incredible overhead time and investment, allowing your company to focus on your mission.

Whether you’re a startup or an enterprise, integrating AI into your workflows is faster, more cost-effective, and more scalable with MCP. From accessing software usage data in Torii to pulling insights from internal wikis, MCP slashes the integration overhead and future-proofs your AI stack.

All Hype, No Bite?

Infrastructure moves like this are not sexy. They are not ready-made products, and they don’t drive ROI. Instead, they are a promise of potential that’s yet to be realized. If you’ve reached this point in the article, you might still wonder, “So what?”

The “so what” is up to you.

MCP is infrastructure, and good infrastructure doesn’t deliver ready-made products. Instead, it removes a burden of busywork from the builder, allowing them to focus on finding solutions to problems.

The real question is, what should you do with this knowledge?

Conclusion: What You Can Do with MCP

We’re still in the early days of MCP, but there are some important steps you can take today!

For Professionals Looking to Streamline Internal AI Integrations

- Evaluate your current approach: Take a look at how your organization currently connects AI with data. Which tools, databases, or platforms do you rely upon? Identify pain points where custom integrations eat up resources and time.

- Leverage vendors with built-in MCP: If you already work with solutions like Torii or other MCP-enabled platforms, talk to your customer success manager about activating MCP features. This can rapidly simplify how you gain insights and take action across your organization.

- Create a unified internal AI strategy: Map out a company-wide plan for adding more MCP-compatible servers and hosts. Identify the data or execution bottlenecks and tackle those first with your AI strategy. Work with vendors to replace multiple custom integrations with a single MCP layer.

For Companies Ready to Adopt MCP in their Product

If you are a data or service provider, now’s the time to explore MCP.

- Build your first MCP server: Identify a key feature or data source within your product and design an MCP server around it. This lets AI apps instantly discover and use those capabilities, giving you a unique differentiator.

- Offer a standardized plug-in experience: An out-of-the-box MCP integration makes it easy for potential customers to adopt your product’s functionality within their AI workflows. Emphasize how being “MCP-ready” shortens onboarding time and reduces friction for your users’ AI strategies.
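For a feel of what a first server involves, here is a stripped-down sketch of the two tool-related requests an MCP server answers, `tools/list` and `tools/call`, as JSON-RPC 2.0 messages. It is a teaching sketch under stated assumptions, not a compliant server: it skips the initialization handshake and the transport layer, and the `count_apps` tool, its schema, and its inventory numbers are made up for illustration. A real project would more likely start from an official MCP SDK.

```python
from typing import Any, Callable, Dict

# Registry of tool name -> description, JSON Schema for inputs, and handler.
TOOLS: Dict[str, Dict[str, Any]] = {}


def tool(name: str, description: str, input_schema: Dict[str, Any]) -> Callable:
    """Register a plain Python function as a callable tool."""
    def register(func: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = {
            "description": description,
            "inputSchema": input_schema,
            "handler": func,
        }
        return func
    return register


@tool(
    name="count_apps",  # hypothetical tool name, for illustration only
    description="Count the apps in a made-up inventory by state.",
    input_schema={
        "type": "object",
        "properties": {"state": {"type": "string"}},
        "required": ["state"],
    },
)
def count_apps(state: str) -> str:
    inventory = {"sanctioned": 42, "discovered": 17}  # made-up sample data
    return str(inventory.get(state, 0))


def _error(request_id: Any, code: int, message: str) -> Dict[str, Any]:
    return {"jsonrpc": "2.0", "id": request_id,
            "error": {"code": code, "message": message}}


def handle(request: Dict[str, Any]) -> Dict[str, Any]:
    """Answer one JSON-RPC 2.0 request for the two tool methods."""
    request_id = request.get("id")
    method = request.get("method")
    params = request.get("params", {})

    if method == "tools/list":
        # Advertise each tool without exposing its Python handler.
        result = {"tools": [
            {"name": name, "description": t["description"],
             "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]}
    elif method == "tools/call":
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            return _error(request_id, -32602, "Unknown tool")
        text = entry["handler"](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return _error(request_id, -32601, "Method not found")

    return {"jsonrpc": "2.0", "id": request_id, "result": result}
```

The listing step is what lets an AI app discover capabilities at runtime: the host reads each tool's name, description, and input schema, then decides when to call it.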

If you’ve ever found yourself juggling dozens of one-off integrations, MCP could be the welcome relief you’ve been waiting for. It turns chaotic spaghetti-wire AI hookups into a scalable standard that allows you to spend less time connecting systems and more time using them.