Shadow AI: What It Is & 2026 Vendor Landscape

Generative AI keeps cropping up in workflows across nearly every department. From late-night prompt tinkering to AI subscriptions slipped onto corporate cards, employees adopt new tools faster than policies can adjust, creating a growing shadow tech stack. Security teams often miss the experiments until chat logs, model endpoints, and surprise GPU bills surface during end-of-quarter reviews.

Employees aren’t trying to break rules; they simply want to outrun rivals. Every prompt pasted into a public chatbot risks leaking pricing sheets, customer records, or code snippets into someone else’s model, expanding exposure far beyond one browser tab. The bill for that exposure usually arrives much later.

This article maps the Shadow AI landscape and sizes up its hidden costs. It also offers pragmatic governance steps that let teams innovate rapidly while staying well within guardrails.

Table of Contents

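- What is Shadow AI?

- Why does Shadow AI happen?

- What’s the difference between Shadow IT and Shadow AI?

- Why is Shadow AI an issue?

- How does Vibe-Coding Impact Shadow AI?

- Why Shadow AI Encompasses Chat Prompts

- What are the Legal Issues with Shadow AI?

- Can Shadow AI cost the company money?

- How Can a Company Fight Shadow AI?

- How Can a Company Find Shadow AI?

- The Top 5 Shadow AI Vendors

- Conclusion

- Frequently Asked Questions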

What is Shadow AI?

Shadow AI refers to the unsanctioned use of artificial intelligence tools, such as chatbots, image generators, and hosted large language models, outside an organization’s approved security and governance frameworks. Staff often adopt these tools with personal or free accounts to speed up tasks, yet in doing so they sidestep data-handling policies, risk assessments, and budget oversight.

Left unchecked, Shadow AI can leak sensitive information to third-party providers, produce unvalidated outputs that drive bad decisions, and scatter proprietary know-how across private workspaces. Industry analysts warn that as AI services multiply, the frequency of incidents stemming from rogue models will rise sharply, mirroring the early chaos of shadow IT.

Why does Shadow AI happen?

Shadow AI appears the moment employees grab an AI tool without an approved purchase order.

Curiosity, tight deadlines, and flashy demos push many workers to open a browser tab and start prompting. Once that first request leaves the network, the company inherits a fresh risk surface, even if the tool never goes through procurement. The model, endpoint, and storage all sit outside the corporate stack, leaving security with no logs, no keys, and no clue what data just slipped away.

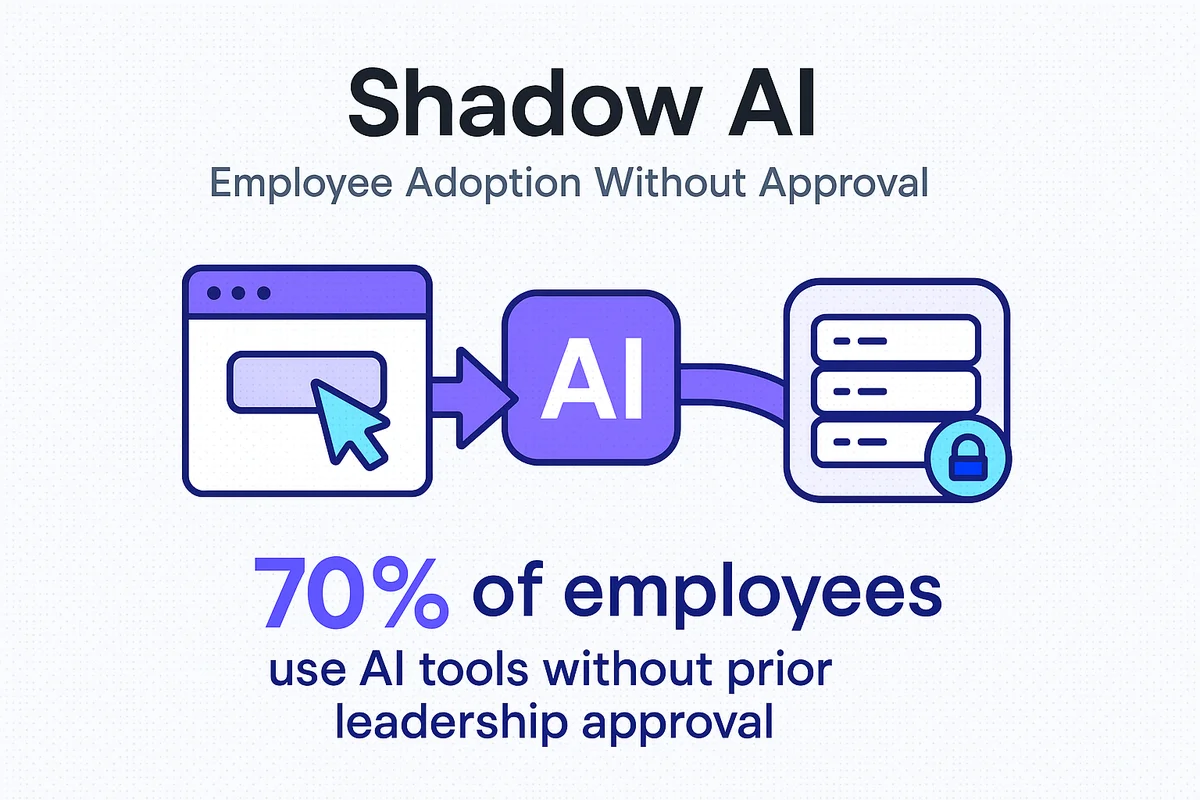

Shadow AI encompasses far more than someone chatting with a public bot. A marketing analyst may fine-tune a small language model in a personal AWS account, while a support rep tests a browser extension that drafts replies through OpenAI’s API. Each scenario falls outside sanctioned budgets yet still touches customer or proprietary data. Microsoft and LinkedIn’s 2024 Work Trend Index reported that 75 percent of knowledge workers already use generative AI at work, and that 78 percent of those users bring their own AI tools rather than waiting for company-provided ones.

In most companies, Shadow AI tends to follow a predictable pattern:

- An individual finds a free or inexpensive AI app and tests it on live data.

- Positive results spread, and peers upgrade to paid tiers on personal cards.

- The tool slips into daily workflows where code, content, or insights become essential to the team.

Early signs of Shadow AI rarely show up on traditional monitoring dashboards. A single HTTPS call to api.openai.com blends in with normal web traffic, and most CASB rules scan for file uploads rather than text pasted into prompts. Even a writing assistant such as the Grammarly browser extension can capture sensitive drafts sentence by sentence before IT notices. By the time finance blocks duplicate subscriptions, hundreds of prompts may have seeded external training sets, creating a permanent data trail. Employees generally mean well; they simply want to move faster and delight customers. Governing Shadow AI starts by admitting that good intentions cannot erase a technical footprint that grows with every unchecked keystroke.

What’s the difference between Shadow IT and Shadow AI?

Shadow AI breaks away from classic Shadow IT in both scope and behavior. While a rogue SaaS license lives on one laptop, an unsanctioned large language model can slip into every slide deck, code repo, and board memo it touches. The purchase feels minor, just a quick card swipe, yet the impact ripples through workflows once AI outputs start chaining together.

Traditional Shadow IT mostly stores or moves data; Shadow AI creates new data that others reuse without noticing. A prompt fed into ChatGPT might draft product copy, which marketing pastes into a landing page, which sales clones for emails. Days later nobody remembers the original source, yet the text is already in production and indexed by search engines. Similar loops appear in engineering when Copilot suggestions land in a pull request and survive code review because they simply work. The risk surface moves from a single endpoint to a sprawling web of derivative work.

Accountability becomes slippery once models generate multiple plausible answers on demand. LLMs sample their outputs probabilistically, so the same request can produce ten different responses, each with its own legal or ethical landmine. When a spreadsheet macro misbehaves, the author is visible. When an LLM writes a financial forecast that misses revenue by 15 percent, the blame diffuses across training data, temperature settings, and prompt tweaks. Audit trails blur because versioning tools rarely capture prompt history or model parameters.
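Closing that audit gap mostly means recording who asked, which model answered, and with what settings, next to every output. The sketch below assumes a hypothetical `GenerationRecord` schema and a JSON-lines audit log; neither is a standard or any vendor's format. It stores SHA-256 hashes instead of raw prompts so the log itself cannot become a second leak, while still letting reviewers match a pasted paragraph back to its generation event.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class GenerationRecord:
    """Hypothetical provenance entry for one AI-generated artifact."""
    user: str
    tool: str            # the chatbot, extension, or API the employee used
    model: str           # model name/version string as reported by the vendor
    temperature: float   # sampling setting; higher values yield more varied outputs
    prompt_sha256: str   # hash, not raw text, so the log holds no secrets
    output_sha256: str
    created_at: str


def sha256_text(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def record_generation(user: str, tool: str, model: str, temperature: float,
                      prompt: str, output: str) -> GenerationRecord:
    """Tie an output back to who produced it and with which settings."""
    return GenerationRecord(
        user=user,
        tool=tool,
        model=model,
        temperature=temperature,
        prompt_sha256=sha256_text(prompt),
        output_sha256=sha256_text(output),
        created_at=datetime.now(timezone.utc).isoformat(),
    )


def to_log_line(record: GenerationRecord) -> str:
    """Serialize as one JSON line, ready to append to an audit log or ship to a SIEM."""
    return json.dumps(asdict(record), sort_keys=True)
```

Hashing is a deliberate trade-off: it proves which exact prompt and output were involved without letting anyone reconstruct them from the log.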

Key differences that security and compliance teams track include:

- Data lineage turns hazy quickly as outputs propagate across documents, commits, and presentations.

- Model behavior shifts over time, so yesterday’s risk assessment may be obsolete after a silent vendor update.

- Intellectual property exposure grows because generated text or code can embed proprietary fragments from the original prompt.

- Mitigation can’t rely on device control alone; it demands content monitoring and model governance.

Seeing Shadow AI as “just another unsanctioned app” misses these nuances and leaves gaps big enough for costly leaks, fines, and brand damage.

Why is Shadow AI an issue?

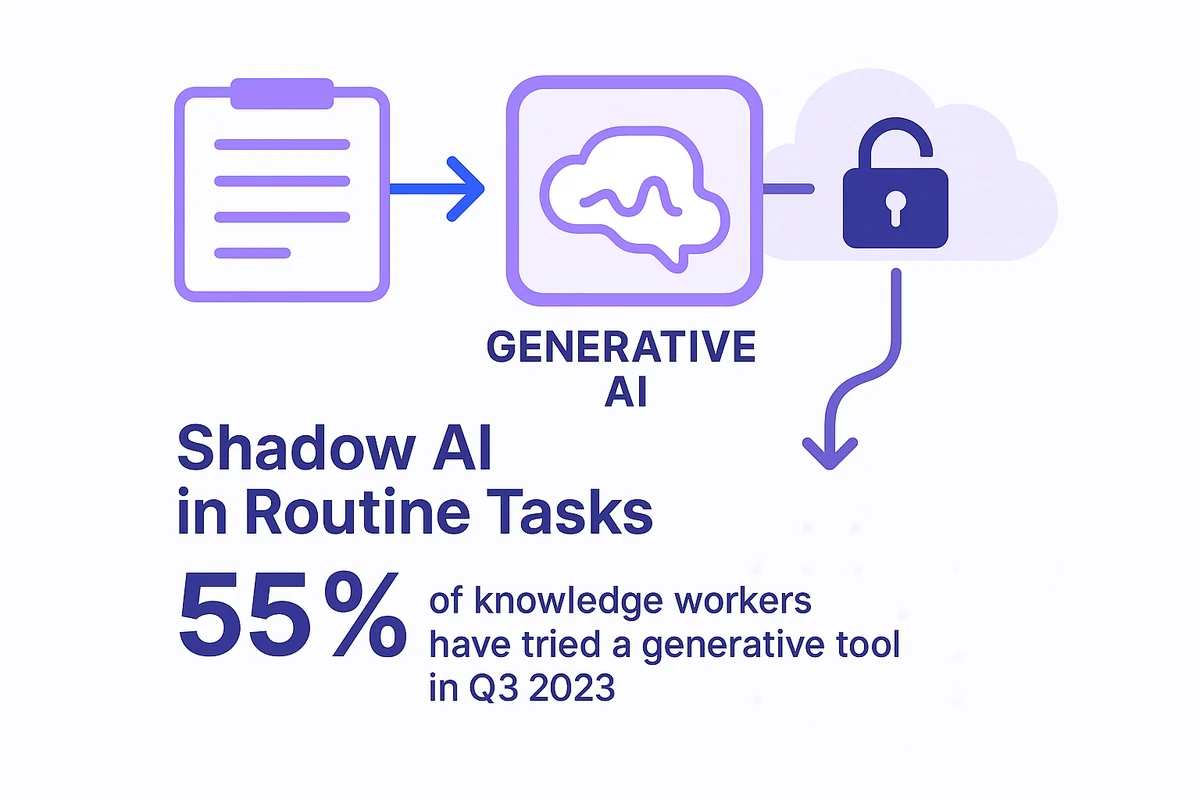

Shadow AI shows up in routine tasks that seem harmless at first glance. When employees paste internal content into public generative models, sensitive data can leave company borders and remain stored in external systems indefinitely.

Usage is rising faster than most security teams can refresh their dashboards. Gartner found that 55 percent of knowledge workers tried a generative tool in Q3 2023, up from 38 percent just two quarters earlier. Each interaction calls remote APIs that log text, images, or code unless settings are changed, a step many users skip.

Design teams often upload campaign files into image generators like Midjourney, unaware that the tool’s terms grant it a broad license to reproduce and build on the prompts and images users submit. Creative that once sat only in a secure Dropbox folder now feeds a public model that thousands of strangers will also shape.

Developers rely heavily on GitHub Copilot, and the platform’s suggestions can slip open-source–licensed snippets into proprietary codebases, creating legal and compliance risks that automated scans may overlook.

Typical purchase paths are invisible to finance until the bill hits. One-person subscriptions appear as:

- Software line items on personal credit card reimbursements

- Annual auto-renewals buried in shared team cards

- Pay-as-you-go cloud charges masked as “misc services”

Multiply that across 20 teams and the firm may fund a dozen duplicate seats of the same model without a volume discount, all while key data flows to places no policy ever approved.
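Finance can surface many of those duplicates from exported expense lines before renewal. A minimal sketch, assuming a hypothetical `Expense` record and a hand-maintained keyword list; plain substring matching will miss charges disguised as "misc services" and can false-match (a merchant or person named Claude, for instance), so treat hits as leads, not verdicts.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple, Optional, Tuple


class Expense(NamedTuple):
    team: str
    description: str
    amount: float


# Hypothetical keyword map; a real one would come from a maintained vendor catalog.
AI_VENDOR_KEYWORDS = {
    "openai": "OpenAI",
    "chatgpt": "OpenAI",
    "anthropic": "Anthropic",
    "claude": "Anthropic",
    "midjourney": "Midjourney",
    "jasper": "Jasper",
}


def match_vendor(description: str) -> Optional[str]:
    """Map a card-statement description to an AI vendor, if any keyword appears."""
    text = description.lower()
    for keyword, vendor in AI_VENDOR_KEYWORDS.items():
        if keyword in text:
            return vendor
    return None


def duplicate_ai_spend(expenses: Iterable[Expense],
                       min_teams: int = 2) -> Dict[str, Tuple[List[str], float]]:
    """Return {vendor: (teams, total spend)} for vendors paid by at least `min_teams` teams."""
    teams = defaultdict(set)
    totals = defaultdict(float)
    for e in expenses:
        vendor = match_vendor(e.description)
        if vendor:
            teams[vendor].add(e.team)
            totals[vendor] += e.amount
    return {
        vendor: (sorted(teams[vendor]), round(totals[vendor], 2))
        for vendor in teams
        if len(teams[vendor]) >= min_teams
    }
```

Each vendor that appears under two or more teams is a candidate for one consolidated contract with a volume discount.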

How does Vibe-Coding Impact Shadow AI?

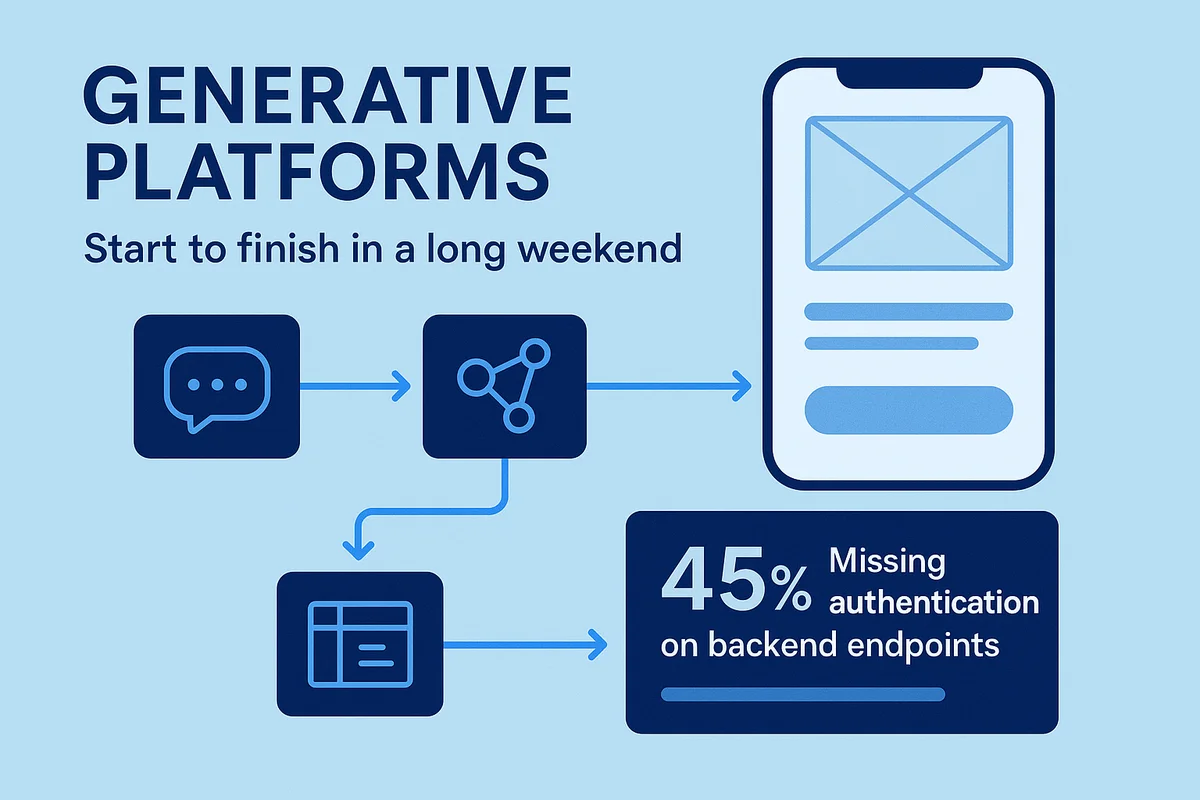

Generative platforms now let anyone stitch together a working app over a long weekend, start to finish.

The effect shows up first in odd corners of a company. Instead of writing traditional code, employees combine ChatGPT prompts, Zapier workflows, and public datasets to deploy customer-facing chatbots in hours rather than sprints. The problem is that no one checks whether the chatbot stores user emails in plain text or if the API key sits exposed on GitHub.

This new habit, often called vibe coding, trades depth for speed. People who have never written a for-loop can now chain prompts and visual connectors to hit production traffic. Seasoned engineers usually catch glaring flaws during code review, but vibe coders push straight to live links, so the first penetration test comes from customers.

The same security mistakes surface again and again:

- Missing authentication on backend endpoints that still sit on public URLs

- Hard-coded secrets copied from tutorials and left in repos

- Default database settings that skip encryption at rest

- Broad CORS rules that allow any origin to call sensitive functions
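Hard-coded secrets are the easiest of these mistakes to catch automatically, for example in a pre-commit hook. A minimal sketch; the regexes are illustrative assumptions (the `sk-` prefix matches the style OpenAI keys use, `AKIA` the prefix of AWS access key IDs), and teams would normally rely on a maintained secret scanner with a much larger, tested rule set. The safer pattern it nudges toward is reading keys from the environment, which the scanner leaves alone.

```python
import re
from typing import List, Tuple

# Illustrative patterns only; dedicated scanners ship far larger rule sets.
SECRET_PATTERNS = {
    "openai_style_key": re.compile(r"\bsk-[A-Za-z0-9_-]{20,}"),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "generic_assignment": re.compile(
        r"(?i)\b(api[_-]?key|secret|token)\s*[:=]\s*['\"][^'\"]{12,}['\"]"
    ),
}


def scan_text(text: str) -> List[Tuple[int, str]]:
    """Return (line_number, rule_name) for every line that looks like it holds a secret."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings
```

For example, a tutorial-style line such as `OPENAI_KEY = "sk-..."` is flagged, while `os.environ["OPENAI_API_KEY"]` is not.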

Public metrics confirm that this isn’t just a passing fad. GitHub search results containing “GPT API key” climbed from under 4,000 in January 2023 to nearly 18,000 a year later. Bubble reported 1.4 million total apps in 2023, a 143 percent jump year over year. In a Low-Code Leaders survey, 62 percent of respondents said at least one non-developer in their group had shipped a customer-visible workflow built on an LLM in the last six months.

Speed feels great until something breaks in production and nobody can explain the stack. When interns own runtime incidents, senior staff scramble to unravel undocumented logic hidden inside prompt chains and third-party connectors. The company moves faster, but it also inherits invisible technical debt that balloons with every quick-hit build no one reviews.

Why Shadow AI Encompasses Chat Prompts

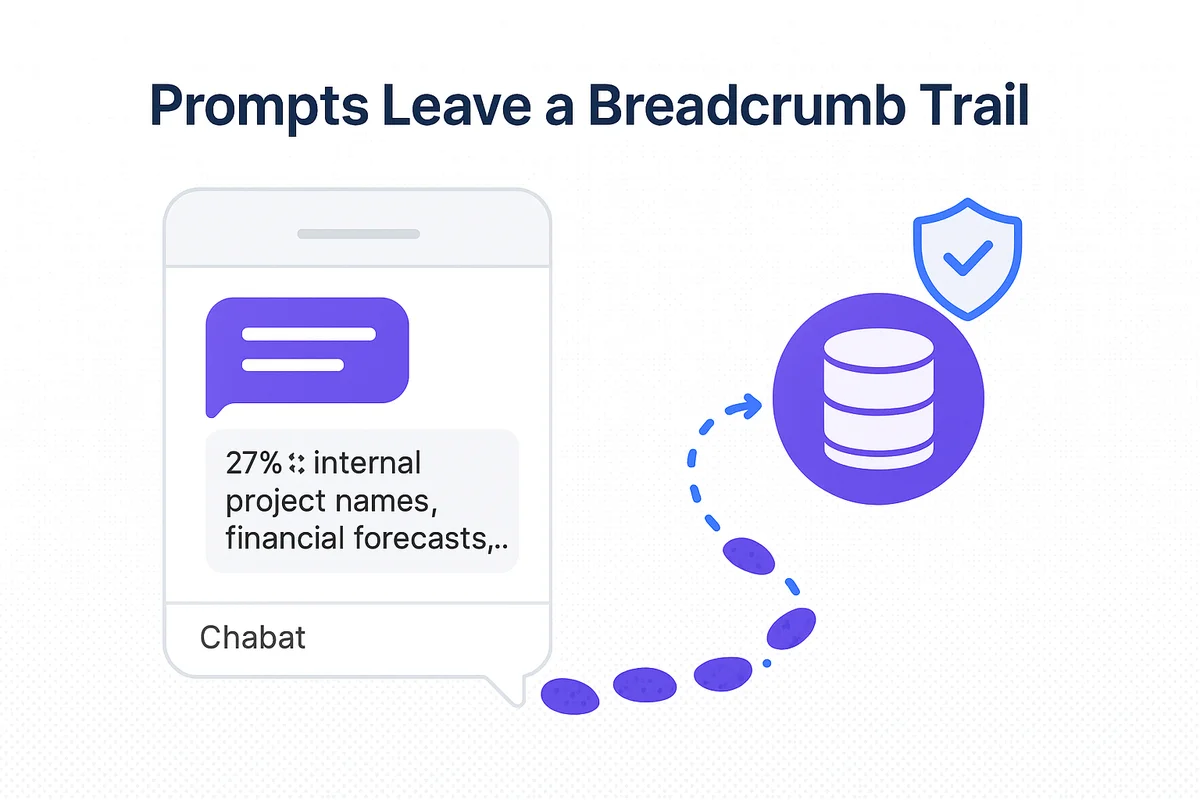

Every snippet or customer record pasted into a chatbot window leaves a breadcrumb trail beyond your perimeter.

Many security teams picture data loss as files walking off the network, yet Shadow AI leaks often start with a single prompt. Providers keep what users type: OpenAI, for example, retains API inputs and outputs for up to 30 days by default for abuse monitoring, and consumer ChatGPT conversations can be used to improve its models unless the user opts out. That retention matters because prompts rarely stay generic. A Stanford review of 43,000 enterprise interactions found that 27 percent included internal project names, financial forecasts, or employee identifiers, details that are often confidential or regulated.

A single incident can quickly slam the door on generative AI use across an entire company. In 2023, Samsung semiconductor engineers pasted proprietary source code, including code they wanted help debugging, and internal meeting notes into ChatGPT. Within weeks the company banned generative AI tools on company devices, citing the risk that data sent to external AI servers is hard to retrieve or delete.

Sensitive details leak in a predictable pattern: curiosity, convenience, click, gone. The specific artifacts may change, yet the flow from helpful prompt to third-party server stays remarkably consistent.

- Source code paths, customer emails, and access tokens embedded in multi-line prompts

- Proprietary slide decks converted to text so the model can “summarize” for an exec

- Draft M&A announcements fed in for tone tweaking hours before the deal goes public

Once content sits off-premises, it can be subpoenaed, exposed in a vendor breach, or, if it is used for training, surface in someone else’s output. The behavior is already routine: Cyberhaven’s 2023 telemetry found that 3.1 percent of workers at its customer companies had pasted confidential company data into ChatGPT.

Data privacy obligations add another layer of risk. Under the EU’s GDPR or Brazil’s LGPD, raw prompts count as personal data if they include a name, phone number, or anything else traceable to a person. Suddenly, an employee’s innocent request, such as “Rewrite Janet Smith’s performance review to sound kinder,” creates a regulated record on servers the company does not control. If that provider suffers an incident, the company may face GDPR’s 72-hour breach notification clock even though none of its own databases was compromised. Other jurisdictions pile on: California’s CCPA, as amended by the CPRA, counts inferences drawn to build a profile of a consumer as personal information, a category that model training logs can easily fall into.

Minimizing the blast radius starts with trimming context before it leaves the browser. Keep local logs of what was redacted, and pick vendors that exclude your data from model training by default or at least let you opt out. You cannot seal every prompt, but you can shrink what matters most.
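Trimming context can be partly automated with a filter that runs before a prompt is sent. A minimal sketch, assuming a hypothetical list of regex rules; pattern-based redaction is crude (the phone rule, for instance, also matches some dates), so it is a first line of defense rather than a guarantee. It returns per-rule counts so the local redaction log can record what was removed without storing the sensitive values themselves.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical redaction rules; tune them to the data your company actually handles.
REDACTION_RULES: List[Tuple[str, re.Pattern]] = [
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")),
    ("PHONE", re.compile(r"(?<!\w)\+?\d[\d\s().-]{7,}\d\b")),
    ("API_KEY", re.compile(r"\bsk-[A-Za-z0-9_-]{20,}")),
]


def redact(prompt: str) -> Tuple[str, Dict[str, int]]:
    """Replace sensitive matches with placeholders; return clean text and per-rule counts."""
    counts: Dict[str, int] = {}
    for label, pattern in REDACTION_RULES:
        prompt, n = pattern.subn(f"[{label}]", prompt)
        if n:
            counts[label] = n
    return prompt, counts
```

Running it on "Email janet.smith@example.com or call +1 415-555-0100 about the review." yields "Email [EMAIL] or call [PHONE] about the review." plus the counts `{"EMAIL": 1, "PHONE": 1}` for the log.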

What are the Legal Issues with Shadow AI?

Hidden AI models can break the law even when no one intends to cheat. Compliance gaps show up not only in data leaks but also in product claims, hiring filters, and even shipping paperwork. Samsung’s 2023 ChatGPT incident showed how a single pasted prompt can move trade secrets onto servers the company does not control.

Several regulatory buckets appear once generative tools move into production workflows. Common flashpoints include:

- Export-control rules that classify certain algorithms, weights, or derived content as dual-use technology

- Sector mandates such as HIPAA, FINRA, and FDA marketing guidelines for medical devices

- Advertising standards that ban unsubstantiated claims, even if a model hallucinated them

- Record-keeping laws that require reproducible decision logic in finance and employment

Bias and discrimination claims arrive quickly because models trained on historical data reproduce its patterns. Amazon scrapped an experimental recruiting model after it learned to downgrade résumés containing the word “women’s,” exactly the kind of outcome equal-employment regulators police. Getty Images sued Stability AI over the use of its copyrighted photos, illustrating how a single model can generate thousands of potentially infringing assets in minutes, each one a possible source of statutory damages. When Air Canada’s customer-service chatbot misstated the airline’s bereavement-fare refund policy, a Canadian tribunal held the airline responsible and ordered it to compensate the passenger, showing that liability sticks even when the response comes from code.

New legislation raises the stakes for every hidden model now deployed in business workflows. The EU AI Act, whose obligations phase in through 2026 and 2027, requires conformity assessments, human oversight, and registration of many high-risk systems in an EU database; hidden tools will either need rapid documentation or outright shutdown. In the United States, the SEC has already brought “AI washing” cases against firms that misrepresented their use of AI, a sign that regulators will scrutinize how companies use and describe these tools. Without a formal intake process, each unsanctioned subscription risks becoming a compliance landmine that detonates long after the monthly charge goes unnoticed.

Can Shadow AI cost the company money?

Shadow AI quietly swells budgets long before finance notices the numbers. Marketing picks up a few Jasper seats, product signs up for ChatGPT Plus, and legal tests Claude Pro, each treating the charge as pocket change. After a quarter, lines that looked like coffee runs total five figures, and nobody holds the contract to push back on price.

Duplicate subscriptions and overlapping seats are only the first leak. Engineers fine-tuning open-source models often spin up GPU instances on evenings or weekends to avoid slowing shared clusters; at roughly $3.40 per A100 GPU-hour on AWS, where A100s come in eight-GPU instances, a single side project can burn through $1,000 in a month. Because these workloads run under personal accounts, cloud-cost dashboards never flag the spike, and finance cannot match invoices to any outcome.
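The arithmetic behind that estimate is easy to script when reviewing expense claims. A small sketch that takes the $3.40 hourly A100 rate cited above as an assumed input; actual cloud prices vary by region, commitment term, and date, so plug in current figures.

```python
def monthly_gpu_cost(rate_per_gpu_hour: float, gpus: int,
                     hours_per_day: float, days: int) -> float:
    """Estimate an on-demand GPU bill: rate x GPUs x hours per day x days."""
    return round(rate_per_gpu_hour * gpus * hours_per_day * days, 2)


def hours_until_budget(rate_per_gpu_hour: float, gpus: int, budget: float) -> float:
    """Wall-clock hours a given number of GPUs can run before spending `budget`."""
    return round(budget / (rate_per_gpu_hour * gpus), 1)


if __name__ == "__main__":
    # One GPU, 10 hours a day for 30 days.
    print(monthly_gpu_cost(3.40, 1, 10, 30))
    # How long a full eight-GPU instance takes to spend $1,000.
    print(hours_until_budget(3.40, 8, 1000))
```

At that rate, one GPU running ten hours a day for 30 days costs about $1,020, and a full eight-GPU instance spends $1,000 in under 37 hours, less than a single weekend.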

Another, quieter cost hides inside fragmented data scattered across tools. Every unsanctioned chatbot or auto-generator keeps its own prompt logs and output history, slicing valuable context across dozens of vendor silos. When the analytics team later tries to train a centralized model, they must first hunt down and normalize those shards, delaying deploys by weeks and inflating consulting fees. Missed volume pricing stings as well; list price for ten separate Midjourney plans runs 30 percent higher than a single enterprise agreement with higher rate limits.

Stack enough direct and indirect drains together and the meter spins fast:

- License duplication across business units when seats aren’t shared

- Redundant API calls that bloat token bills on OpenAI or Anthropic

- Rogue cloud instances racking up untracked compute and storage fees

- Fragmented datasets requiring extra ETL work before reuse

- Lost bulk discounts and true-up penalties when vendors spot overages

Finance teams can dodge the frantic penny hunt by treating AI spend like any other strategic investment. Centralized chargeback codes, quarterly vendor reviews, and clear procurement thresholds restore visibility while still leaving room for quick experiments. When employees know where the sandboxes sit, the urge to slip a card in unnoticed fades, and dollars stay tied to results.

How Can a Company Fight Shadow AI?

Governance has teeth only when policies leave static PDFs and show up in daily workflows. A usable AI policy should read like a field guide, not a contract, and help anyone decide in seconds if a prompt is safe. Teams that get this right keep it brief and surface it where work happens:

- Approved AI tools and the data each may touch

- A red-yellow-green risk tier for new services

- Examples of banned content: customer PII, financial forecasts, unreleased code

- An escalation email or Slack channel for gray-area questions
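A policy this short can also be expressed as data that a browser extension, chat bot, or proxy consults before a prompt is sent. A minimal sketch, assuming hypothetical tool names and data classes; the results mirror the red-yellow-green tiers, the banned-content examples, and the escalation channel above, with "allow_logged" standing in for the yellow tier's "approved with limits".

```python
from enum import Enum
from typing import Dict, FrozenSet, Tuple


class Tier(Enum):
    GREEN = "approved"
    YELLOW = "approved with limits"
    RED = "blocked"


# Hypothetical registry: tool name -> (risk tier, data classes the tool may touch).
TOOL_POLICY: Dict[str, Tuple[Tier, FrozenSet[str]]] = {
    "enterprise-chat": (Tier.GREEN, frozenset({"public", "internal_docs"})),
    "code-assistant": (Tier.YELLOW, frozenset({"public", "open_source_code"})),
    "free-image-gen": (Tier.RED, frozenset()),
}

# Mirrors the banned-content examples: never allowed in any tool.
BANNED_EVERYWHERE = frozenset({"customer_pii", "financial_forecast", "unreleased_code"})


def check(tool: str, data_classes: FrozenSet[str]) -> str:
    """Return 'allow', 'allow_logged', 'escalate', or 'block' for a proposed use."""
    if data_classes & BANNED_EVERYWHERE:
        return "block"
    policy = TOOL_POLICY.get(tool)
    if policy is None:
        return "escalate"  # unknown tool: route to the gray-area channel
    tier, allowed = policy
    if tier is Tier.RED:
        return "block"
    if not data_classes <= allowed:
        return "escalate"  # approved tool, but not for this kind of data
    return "allow" if tier is Tier.GREEN else "allow_logged"
```

Unknown tools deliberately escalate rather than block, which keeps curiosity flowing toward the review channel instead of around it.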

Training turns policy from paper into action. During onboarding, a 15-minute interactive module shows new hires how a stray prompt travels from their browser to an LLM provider’s servers and, potentially, its training set. Later, a Slack bot drops timely reminders when someone tries pasting more than 500 characters into an external site. Quarterly “prompt-phish” drills (mock inputs seeded with fake secrets) test vigilance and feed an internal leaderboard that turns security into a friendly game.
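A prompt-phish drill hinges on planting fake secrets that are unique enough to spot later. A minimal sketch, assuming the drill team can export outbound request bodies from a proxy or CASB (a hypothetical data source here): generate a canary per drill, seed it in a mock document, then check whether it shows up in traffic bound for AI endpoints.

```python
import secrets
from typing import Iterable, List


def make_canary(prefix: str = "CANARY") -> str:
    """Create a unique fake secret that looks credential-like but grants no access."""
    return f"{prefix}-{secrets.token_hex(12)}"


def find_canary_leaks(canaries: Iterable[str], outbound_payloads: Iterable[str]) -> List[str]:
    """Return the canaries that appear in any outbound payload (e.g. exported proxy logs)."""
    payloads = list(outbound_payloads)
    return [c for c in canaries if any(c in p for p in payloads)]
```

Because each canary is random and single-use, any match points to one specific drill document rather than to a generic false positive.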

Even with guardrails in place, employee curiosity still needs a safe outlet. Offering an AI sandbox backed by pre-approved models lets staff explore without risking their production data. Logs stream into the SIEM so security can spot patterns, while throttled network egress blocks large dataset exfiltration.
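Throttled egress is commonly built as a token bucket: prompts and small files pass, but a bulk upload drains the allowance and gets refused. A minimal sketch, assuming the sandbox's proxy calls `allow()` before forwarding each request body; the rate and burst sizes are placeholders to tune, and the injectable clock exists only to make the behavior testable.

```python
import time
from typing import Callable


class EgressBucket:
    """Token bucket over bytes: allows short bursts but caps the sustained upload rate."""

    def __init__(self, rate_bytes_per_sec: float, burst_bytes: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate_bytes_per_sec
        self.capacity = burst_bytes
        self.tokens = burst_bytes
        self.clock = clock
        self.last = clock()

    def allow(self, nbytes: int) -> bool:
        """Spend tokens for an upload of `nbytes`; refuse it if the bucket is too empty."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond the burst capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if nbytes <= self.tokens:
            self.tokens -= nbytes
            return True
        return False
```

With a 1 KB/s rate and a 5 KB burst, a 4 KB prompt passes immediately, while any single upload larger than the burst size, such as a dataset export, can never fit.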

Regular feedback loops keep the guardrails relevant. An AI council that meets monthly reviews new vendor pitches, captures lessons from incidents, and pushes micro-updates to the policy. Public shout-outs for employees who surface risks, paired with visible metrics such as weekly charts of sanctioned versus shadow interactions on a Confluence page, help the workforce view governance as a shared win rather than a speed bump.

How Can a Company Find Shadow AI?

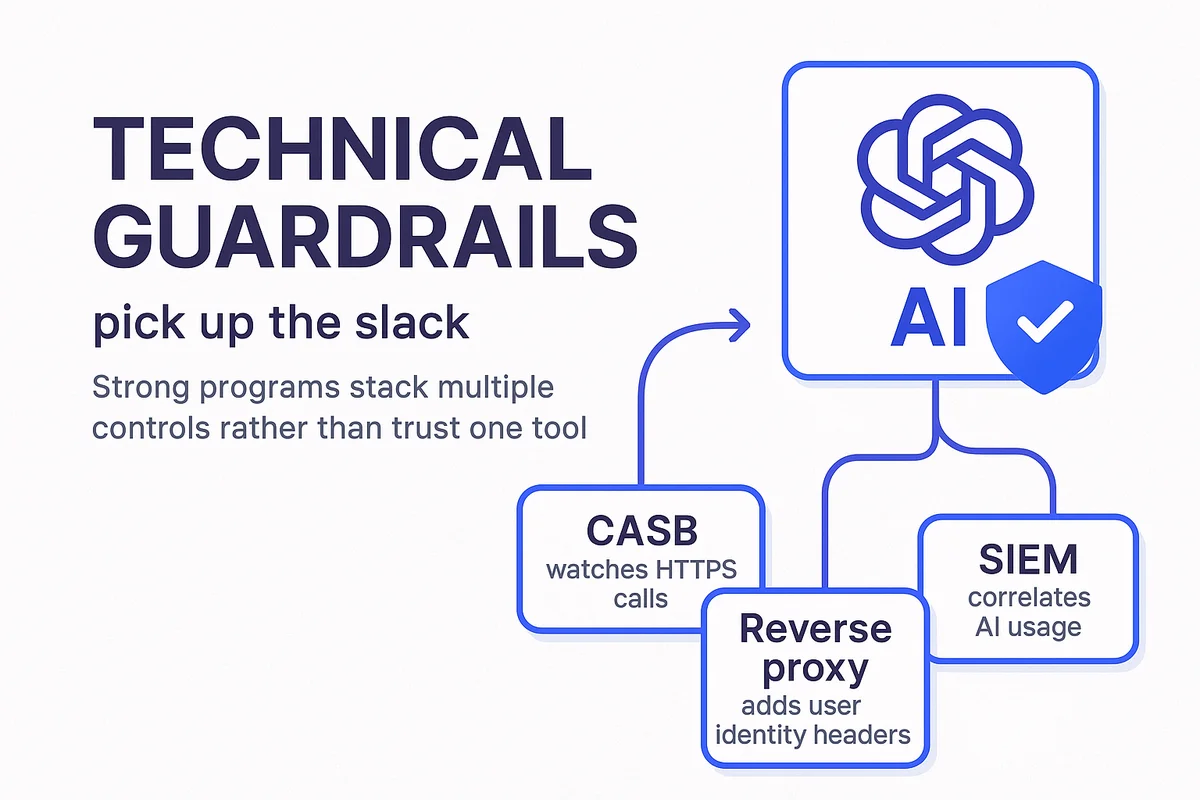

Technical guardrails pick up the slack when employees start experimenting with new AI tools. Security’s goal is twofold: see every interaction with a model in real time and stop sensitive data from escaping.

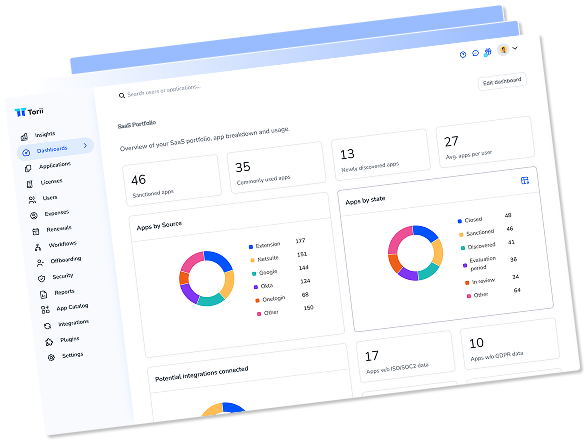

SaaS management platforms give security teams a live map of every paid or free service in use across the company. When one detects a sudden spike in traffic to api.openai.com, it can automatically flag the owner, the cost center, and whoever put the subscription on the corporate card. That context feeds risk scores directly into purchasing workflows so rogue subscriptions get paused before renewal.
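The core of that spike detection fits in a few lines. A minimal sketch, assuming proxy logs already reduced to (user, host) pairs and a per-user baseline computed elsewhere; the host list, 3x factor, and 50-request floor are illustrative assumptions, not any platform's defaults. Suffix matching also catches subdomains of the listed hosts.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# Illustrative watch list; extend it with the AI endpoints your traffic actually shows.
AI_HOSTS = ("api.openai.com", "chatgpt.com", "api.anthropic.com", "claude.ai")


def is_ai_host(host: str) -> bool:
    """True if `host` is a watched AI host or one of its subdomains."""
    host = host.lower().rstrip(".")
    return any(host == h or host.endswith("." + h) for h in AI_HOSTS)


def flag_spikes(
    today: Iterable[Tuple[str, str]],   # (user, host) pairs from proxy logs
    baseline: Dict[str, float],         # user -> typical daily AI request count
    factor: float = 3.0,
    min_requests: int = 50,
) -> Dict[str, int]:
    """Return {user: count} for users whose AI-bound requests exceed factor x baseline."""
    counts = Counter(user for user, host in today if is_ai_host(host))
    return {
        user: n
        for user, n in counts.items()
        if n >= min_requests and n > factor * baseline.get(user, 0.0)
    }
```

A user with no baseline at all is flagged once they pass the floor, which is usually the moment a new shadow tool appears.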

Strong programs stack multiple controls rather than trust one tool.

- A CASB watches HTTPS calls to AI endpoints and flags forbidden payloads on the spot.

- A reverse proxy adds user identity headers so logs point to real names.

- The SIEM correlates AI usage with other alerts, catching odd volumes and late-night spikes.

- An approval bot in chat asks for a quick business reason before handing out an API key.

Cloud Access Security Brokers go further by inspecting the content of payloads headed to model endpoints, not just their destination. With regex tuned for patient data, a Zscaler policy can quarantine an upload to Claude in under 300 milliseconds without cutting off the session. Those detailed logs go to the SIEM for threat hunting and board-level metrics.

Visibility only matters if it drives action, so tight integrations push discoveries into ticketing tools. An Okta workflow can require manager and security sign-off before anyone connects a new AI SaaS to the corporate single sign-on, trimming shadow spend immediately. Over time the data builds a feedback loop, showing which policies need tweaks and which teams deserve sandbox capacity instead of blanket blocks.
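The gating rule behind such a workflow is small. A minimal sketch of the rule itself, not of Okta's API: the class, roles, and fields are hypothetical, and in practice the identity provider's workflow engine would hold this state and trigger the SSO connection.

```python
from dataclasses import dataclass, field
from typing import Set

REQUIRED_ROLES = frozenset({"manager", "security"})


@dataclass
class AppConnectionRequest:
    """Hypothetical request to connect a new AI SaaS app to corporate SSO."""
    app: str
    requester: str
    business_reason: str
    approvals: Set[str] = field(default_factory=set)

    def approve(self, role: str, approver: str) -> None:
        """Record one sign-off; requesters cannot approve their own app."""
        if approver == self.requester:
            raise ValueError("requesters cannot approve their own app")
        if role not in REQUIRED_ROLES:
            raise ValueError(f"unknown approver role: {role}")
        self.approvals.add(role)

    @property
    def can_connect(self) -> bool:
        # Both sign-offs and a stated business reason are required before SSO access.
        return bool(self.business_reason.strip()) and REQUIRED_ROLES <= self.approvals
```

Requiring a non-empty business reason doubles as data for the feedback loop: the reasons show which teams need sandbox capacity.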

The Top 5 Shadow AI Vendors

Below is a breakdown of five leading solutions for Shadow AI. These tools aren’t mutually exclusive: some address Shadow AI visibility, while others monitor threats in real time. Treat the list as a starting point for researching the types of tools that help fight Shadow AI.

Torii

Torii is a SaaS Management Platform that sends security teams real-time alerts when an employee signs up for a new AI tool. This data is mined from browser extensions, contracts, finance tools, SSO tools, and more. Workflows in Torii can also automatically send those employees messages asking them to describe the product and explain how they are using it, allowing security teams to stay on top of new AI tools without manually reaching out.

Pros:

- Torii’s multi-layered discovery is designed to uncover every app, user, and expense across your organization. Torii reports that companies typically discover 4x more apps than they thought they had.

- Security teams get ongoing alerts for new apps and can set up risk scores so high-risk AI tools get flagged immediately.

Trade-offs:

- Torii is a SaaS Management tool, not a dedicated InfoSec or cybersecurity tool. It’s more about providing visibility into the Shadow AI your company is using than actively preventing threats.

- Torii focuses on AI and SaaS versus internal tooling or on-prem software.

Microsoft Purview

Microsoft Purview is a platform for data governance, security, and compliance that spans Microsoft 365, Azure, on-premises stores, multicloud services, and SaaS apps. It combines cataloging, classification, data loss prevention, insider-risk management, and eDiscovery in a single management experience.

Pros:

- A single console governs and protects data across cloud, on-prem, and SaaS estates, reducing tool sprawl and blind spots.

- Works natively with Microsoft 365, Defender, and Entra ID, streamlining labels, DLP rules, and access controls.

- Browser-based and endpoint DLP block high-risk actions, while insider-risk analytics surface anomalous behavior.

Trade-offs:

- Key features require Microsoft 365 E5 or add-on SKUs, pushing total cost of ownership higher.

- Deepest capabilities center on Microsoft workloads; coverage for non-Microsoft data sources may lag or need connectors.

- Relying on a single-vendor stack may limit flexibility if future strategy shifts away from Microsoft.

Nightfall AI

Nightfall AI is an AI-native data loss prevention and insider risk management platform that protects sensitive data across SaaS apps, GenAI tools, email, endpoint devices, and more. Nightfall says its AI-powered detection identifies and redacts sensitive data accurately, reducing false positives and streamlining security workflows.

Pros:

- GenAI detectors that Nightfall calls industry-first; the company claims 2x greater precision than legacy DLP, leading to 4x fewer false positive alerts.

- Fast setup with Chrome browser plugin or API integrations that can be installed in minutes

- Comprehensive platform coverage including SaaS Security Posture Management, client-side encryption, data exfiltration prevention, and automated response capabilities

Trade-offs:

- While Nightfall AI provides a developer platform to build DLP into custom applications, this functionality comes at an additional cost.

- Although Nightfall AI excels at detecting and preventing data loss, its monitoring capabilities may be limited compared to other DLP solutions.

Island Enterprise Browser

Island delivers a Chromium-based enterprise workspace where security, data governance, and productivity tools live directly in the browser. Conditional access controls, zero-trust network access, and granular data policies give security teams visibility over every action, while users keep the familiar Chrome-like experience.

Pros:

- Native threat protection, ZTNA to private apps, and fine-grained data controls stop phishing, malware, and leakage without extra agents.

- Logs every session for compliance and streams telemetry to existing platforms for forensics.

- Enables secure access on unmanaged devices and can replace VDI for remote users.

Trade-offs:

- Replacing the default browser requires onboarding and ongoing policy tuning; some users face a learning curve.

- Annual pricing starts around $25k, which may deter smaller teams until the MSP model matures.

- Focuses on security and compliance; does not address real-time SaaS discovery.

Cisco Umbrella

Cisco Umbrella is a cloud-delivered security service that blocks risky generative-AI domains at the DNS layer. It inspects every DNS, IP, and HTTP request to flag new AI endpoints within minutes, enforcing policy before any device reaches an unvetted model.

Pros:

- Detects emerging AI domains quickly, informed by 620 billion daily DNS lookups

- Lets admins set risk scores and granular policies for each AI tool

- Integrates with SecureX, CASB, SIEMs, and Umbrella Investigate for broader incident response and forensics

Trade-offs:

- Full content inspection still depends on SecureX or third-party tools

- Fine-tuning policies to balance innovation and control takes ongoing effort

- Focuses on security, not SaaS spend or broader IT automation

Conclusion

Shadow AI slips into workflows faster than most leaders realize. Our tour through its origins, use cases, and growing crop of “vibe-coded” apps showed how easily teams trade control for speed. We mapped the fallout, including data leaks, compliance gaps, and extra spending, then laid out people-first policies, sandboxes, and real-time monitoring that cut risk without killing curiosity. The message is plain: acknowledge the behavior, guide it, and watch innovation bloom safely.

Treat shadow AI as an impulse, not a rebellion, and channel it through guardrails before it channels you.

Audit your company’s SaaS usage today

If you’re interested in learning more about SaaS Management, let us know. Torii’s SaaS Management Platform can help you:

- Find hidden apps: Use AI to scan your entire company for unauthorized apps, in real time and continuously in the background.

- Cut costs: Save money by removing unused licenses and duplicate tools.

- Implement IT automation: Automate your IT tasks to save time and reduce errors - like offboarding and onboarding automation.

- Get contract renewal alerts: Ensure you don’t miss important contract renewals.

Torii is the industry’s first all-in-one SaaS Management Platform, providing a single source of truth across Finance, IT, and Security.

Learn more by visiting Torii.

Frequently Asked Questions

What is Shadow AI?

Shadow AI refers to the unauthorized use of AI tools outside an organization's official security protocols, often leading to data leaks and compliance risks.

Why does Shadow AI happen?

Shadow AI emerges when employees use AI tools without official approval, often driven by urgency or curiosity, exposing the organization to unforeseen risks.

What’s the difference between Shadow IT and Shadow AI?

While Shadow IT mostly stores or moves data without approval, Shadow AI creates new data without oversight, spreading risks more broadly through workflows.

Why is Shadow AI an issue?

Shadow AI can lead to sensitive data leakage, costly compliance violations, and untracked expenses, often hidden until revealed by financial audits.

How Can a Company Fight Shadow AI?

Effective Shadow AI management includes creating clear policies, providing training, and offering sandbox environments for experimentation while monitoring usage.

Can Shadow AI cost the company money?

Shadow AI can inflate budgets through duplicate subscriptions, hidden costs, and unmonitored use of resources, leading to significant unexpected expenses.

How Can a Company Find Shadow AI?

Companies can use SaaS management tools that monitor software use in real time, flag unauthorized AI tools, and generate alerts for management.

What are the top Shadow AI tools?

The top tools for managing Shadow AI include Torii, Microsoft Purview, Nightfall AI, Island Enterprise Browser, and Cisco Umbrella.