5 Questions to Evaluate the Risk of an AI Tool in 2026

AI vendors often promise quick wins and lower costs, yet procurement teams care more about the risks behind each claim. When sensitive data, regulated workflows, and unpredictable usage fees converge on a single platform, legal, security, and finance leaders must dissect the architecture before any contract is signed. Skipping that analysis can turn a harmless pilot into an expensive, non-compliant mess.

Evaluation checklists often miss edge cases that later dominate incident reports. Five focused questions keep the discussion grounded in facts, covering the data lifecycle, technical safeguards, legal duties, budget swings, and who holds the keys. Tying each question to hard evidence, such as flow diagrams, test results, or contract language, lets teams judge vendors on the same scale instead of relying on polished demos.

Start with those five questions, and you can move quickly without stacking up extra risk.

Table of Contents

- How does the AI tool handle sensitive data?

- What security controls protect the AI infrastructure?

- Does the vendor meet compliance requirements?

- What is the total cost and risk?

- How granular is user and admin access control?

- Conclusion

- Audit your company's SaaS usage today

How does the AI tool handle sensitive data?

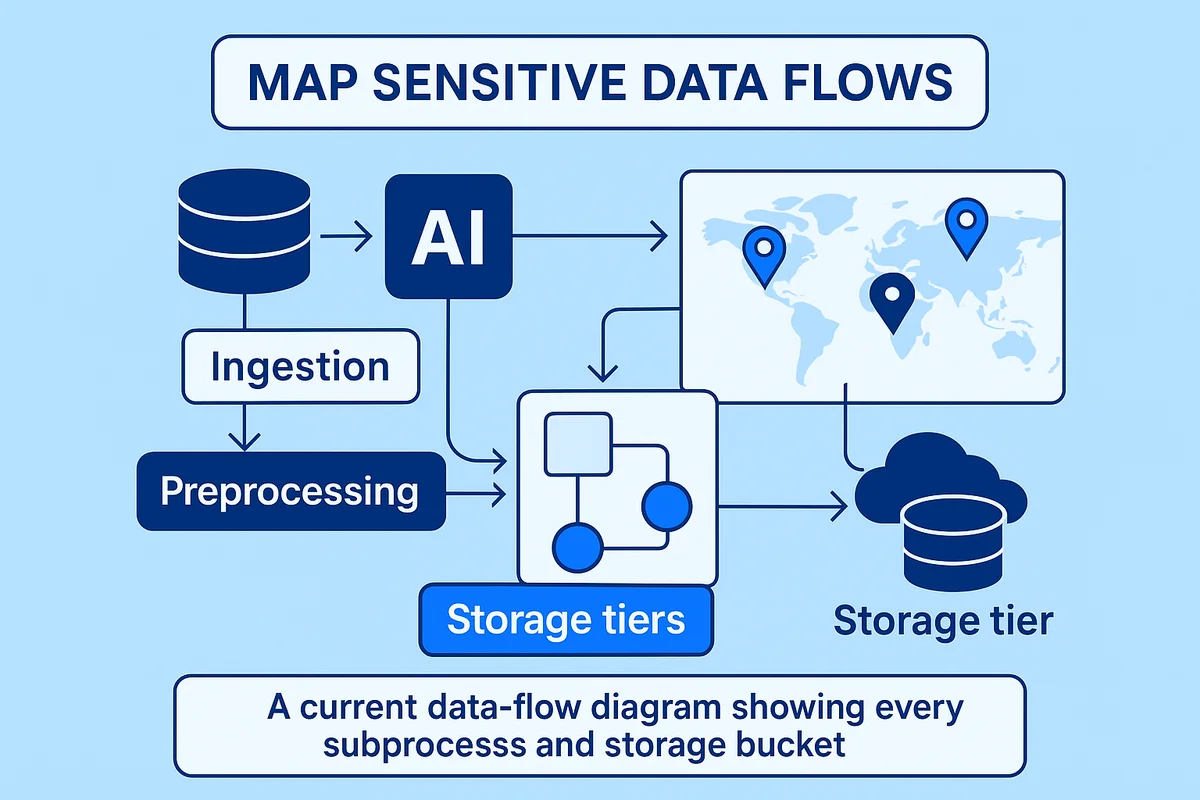

Start every AI pilot with a clear map of where sensitive data moves inside the product. Capture each hop, such as ingestion, preprocessing, model training, storage tiers, backups, and disposal, before a single record leaves your network. Without that map, it becomes almost impossible to spot the shadow copies or silent retention that later trigger privacy headaches.

Ask the vendor to pin every leg of that journey to a physical location. If your firm serves EU clients, you need to know whether the raw files sit in Frankfurt, get mirrored in Virginia, or move again for disaster recovery. Location clarity also guides retention: seven years for regulated records may be overkill for chatbot prompts that lose value after 24 hours.
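To make the location and retention questions concrete, here is a minimal Python sketch that checks a vendor-supplied list of hops against an internal policy. The `Hop` record, the approved-region set, and the retention limits are all hypothetical; the region codes follow AWS naming purely as an example (`eu-central-1` is Frankfurt, `us-east-1` is Northern Virginia).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hop:
    """One leg of the data journey, as described by the vendor (hypothetical schema)."""
    stage: str           # e.g. "ingestion", "backup", "disaster recovery"
    region: str          # physical location of this copy
    retention_days: int  # how long data stays at this hop


# Hypothetical internal policy: approved regions and maximum retention per data type.
ALLOWED_REGIONS = {"eu-central-1", "eu-west-1"}
MAX_RETENTION_DAYS = {"chat_prompt": 1, "regulated_record": 7 * 365}


def review_flow(data_type: str, hops: list[Hop]) -> list[str]:
    """Return a finding for every hop that breaks the region or retention policy."""
    limit = MAX_RETENTION_DAYS[data_type]
    findings = []
    for hop in hops:
        if hop.region not in ALLOWED_REGIONS:
            findings.append(f"{hop.stage}: stored outside approved regions ({hop.region})")
        if hop.retention_days > limit:
            findings.append(
                f"{hop.stage}: keeps {data_type} for {hop.retention_days} days (limit {limit})"
            )
    return findings


if __name__ == "__main__":
    flow = [
        Hop("ingestion", "eu-central-1", 1),
        Hop("backup", "us-east-1", 30),
    ]
    for finding in review_flow("chat_prompt", flow):
        print(finding)
```

In this example the Virginia backup is flagged twice: once for leaving the approved regions and once for holding day-old chatbot prompts for a month.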

Here are artifacts a mature provider should hand over without hesitation:

- A current data-flow diagram showing every subprocess and storage bucket

- Documented data-minimization logic, preferably enforced in code repositories

- Encryption details for data at rest, including key rotation intervals

- Privacy certifications such as ISO/IEC 27701, plus a recent SOC 2 Type II report (OpenAI, for example, released one for ChatGPT Enterprise in 2023)

- Proof of separate tenancy or segmentation that keeps customer datasets isolated

Even with strong documentation, decide who inside your company must bless the risk. Many organizations route a Data Protection Impact Assessment through legal, security, and the data-governance council, yet skip procurement, creating mismatched contracts later. Set a rule: no purchase order until the DPIA owner, typically the privacy officer, signs the final lifecycle diagram. That single gate keeps everyone honest about how the tool will collect, store, and securely dispose of data when the project or the vendor relationship ends.

What security controls protect the AI infrastructure?

Solid application code matters little once attackers compromise the infrastructure beneath the model. Security improves when you treat the model, its serving layer, and each API call as exposed attack surface, not hidden plumbing.

Start with the basics: how the system safeguards data in motion across every hop. Does every request travel over TLS 1.3, which requires ephemeral (forward-secret) key exchange for full handshakes, and does the vendor rotate certificates automatically? For data at rest, a provider like Google Cloud offers customer-managed encryption keys; if your candidate can’t match that, you inherit needless escrow risk. Make sure logs stream into your SIEM in near real time so anomalies, such as sudden token spikes or calls from unfamiliar regions, surface before attackers do.
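Checks like these usually live as SIEM rules, but the logic is simple enough to sketch in Python. This sketch assumes a hypothetical event shape of `(api_key, region, tokens_used)`, an allow-list of expected regions, and an arbitrary spike threshold of five times the median token count; all three should be tuned to your own traffic.

```python
from statistics import median

# Hypothetical allow-list and threshold; tune both to your own traffic.
KNOWN_REGIONS = {"eu-central-1", "eu-west-1"}
SPIKE_FACTOR = 5  # flag calls using more than 5x the median token count


def flag_anomalies(events: list[tuple[str, str, int]]) -> list[str]:
    """Flag calls from unexpected regions or with unusually high token usage.

    Each event is an assumed (api_key, region, tokens_used) tuple.
    """
    if not events:
        return []
    typical = median(tokens for _, _, tokens in events)
    alerts = []
    for api_key, region, tokens in events:
        if region not in KNOWN_REGIONS:
            alerts.append(f"{api_key}: call from unfamiliar region {region}")
        if tokens > SPIKE_FACTOR * typical:
            alerts.append(f"{api_key}: token spike ({tokens} vs median {typical})")
    return alerts


if __name__ == "__main__":
    sample = [
        ("key-a", "eu-west-1", 100),
        ("key-b", "eu-west-1", 120),
        ("key-c", "eu-central-1", 110),
        ("key-d", "ap-south-1", 2000),
    ]
    for alert in flag_anomalies(sample):
        print(alert)
```

The median is used instead of the mean so that a single runaway call does not raise the baseline it is compared against.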

Evaluate safeguards with the same scrutiny you give a payment gateway:

- Architecture diagrams that spell out where the model weights reside and who can reach them

- Evidence of quarterly vulnerability scans plus annual penetration tests led by CREST-accredited or OSCP-certified testers

- Rate limiting and input sanitization that slow prompt-injection attempts and reject malformed payloads

- Options like VPC peering that keep inference traffic off the public internet
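Rate limiting and payload validation are easy to reason about in isolation. The sketch below pairs a standard token-bucket limiter with a simple schema check; the `prompt` field name and the 8,000-character ceiling are assumptions, not any vendor's API. Neither control stops prompt injection on its own: the limiter slows automated probing, and the validator only rejects malformed requests.

```python
import time
from typing import Callable

MAX_PROMPT_CHARS = 8000  # assumed ceiling; set to whatever your workload needs


class TokenBucket:
    """Token-bucket limiter: refills `rate` tokens per second, holds at most `capacity`."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start full so ordinary bursts pass
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        """Consume one token if available; otherwise reject the request."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def valid_payload(payload: object) -> bool:
    """Accept only a dict whose 'prompt' is a non-empty string within the size limit."""
    if not isinstance(payload, dict):
        return False
    prompt = payload.get("prompt")
    return isinstance(prompt, str) and 0 < len(prompt) <= MAX_PROMPT_CHARS
```

Injecting `clock` lets tests advance time without sleeping; in production the default `time.monotonic` avoids surprises from wall-clock adjustments.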

Incident response capabilities quickly separate dependable partners from hopeful startups. Ask how long they need to patch a critical CVE; 24 hours is routine for mature SaaS shops, while some AI entrants still quote seven days. Demand clear separation between development and production, because a single engineer who both deploys code and manages secrets invites silent privilege escalation. Dismiss any vendor unwilling to share its incident-response runbook or a summary of its last penetration test.

Carefully map every promised control to your own shared-responsibility matrix. When the dashboard advertises “one-click key rotation,” verify that it works with your HSM and alerts your security team. The verdict should be simple to defend: either the platform integrates with your existing detection and response workflows, or it creates another blind spot that resurfaces at the next board meeting.

Does the vendor meet compliance requirements?

A flashy AI demo means nothing if the vendor can’t clear the compliance hurdles your auditors care about.

Confirm that the provider already aligns with the frameworks you follow, such as GDPR, HIPAA, PCI DSS, or FedRAMP. A missing SOC 2 Type II report or HIPAA business associate agreement can stall the deal longer than any contract redline. Ask whether the vendor’s auditors tested the model pipeline itself, not just the surrounding network, because regulators increasingly treat training data as in-scope information.

Cross-border data traffic adds another layer of risk to any AI rollout. If user prompts leave the EU, the vendor should back each transfer with Standard Contractual Clauses and, where possible, offer EU-based processing on platforms like AWS or Azure so prompts can stay local. Audit rights must give your team access to evidence, including subcontractor logs, within a reasonable window. No sub-processor list, no deal. The Data Processing Agreement should spell out incident-notification timelines and who bears breach liability.

New privacy laws keep arriving faster than most teams can track them. California’s CPRA rules began updated enforcement in March, and EU AI Act obligations continue to phase in, some possibly before your renewal term ends. Lock in a contract addendum that forces the vendor to meet any new legal standard within a set grace period. Then track gaps the same way auditors do:

- Map each requirement to the control that satisfies it

- Flag missing or partial controls for remediation

- Assign an internal owner and target date for each gap

- Store evidence in a central folder your auditors can reach

Finish the review with a rolling compliance gap matrix that everyone can read at a glance. One spreadsheet, updated quarterly, shows executives where residual risk sits, how much it costs to close, and when it will disappear. When procurement sees that matrix, legal questions shrink and budget talks get easier.
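The gap matrix can start as a plain CSV generated from a list of requirement records. The sketch below is one possible layout, with hypothetical field names that mirror the four tracking steps above: the control that satisfies each requirement, its status, an owner and target date, and a pointer to the evidence. Gaps that still lack an owner or date are surfaced separately so they don't slip.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from datetime import date
from typing import Optional


@dataclass
class Requirement:
    framework: str                 # e.g. "GDPR", "SOC 2"
    requirement: str               # what the framework asks for
    control: str                   # vendor control that satisfies it ("" if none)
    status: str                    # "met", "partial", or "missing"
    owner: str = ""                # internal owner for an open gap
    target: Optional[date] = None  # target remediation date
    evidence: str = ""             # location in the shared evidence folder


def open_gaps(rows: list[Requirement]) -> list[Requirement]:
    """Requirements that are missing or only partially met."""
    return [r for r in rows if r.status != "met"]


def unassigned_gaps(rows: list[Requirement]) -> list[Requirement]:
    """Open gaps that still lack an owner or a target date."""
    return [r for r in open_gaps(rows) if not r.owner or r.target is None]


def to_csv(rows: list[Requirement]) -> str:
    """Render the matrix as CSV text for a shared spreadsheet."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(Requirement)])
    writer.writeheader()
    for row in rows:
        writer.writerow(asdict(row))  # None becomes an empty cell
    return buf.getvalue()


if __name__ == "__main__":
    matrix = [
        Requirement("GDPR", "Processor contract", "Signed DPA", "met",
                    evidence="evidence/dpa.pdf"),
        Requirement("SOC 2", "Current Type II report", "", "missing",
                    owner="security", target=date(2026, 6, 30)),
        Requirement("HIPAA", "Business associate agreement", "Draft BAA", "partial"),
    ]
    print(to_csv(matrix))
    print("Needs an owner or date:", [r.framework for r in unassigned_gaps(matrix)])
```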

What is the total cost and risk?

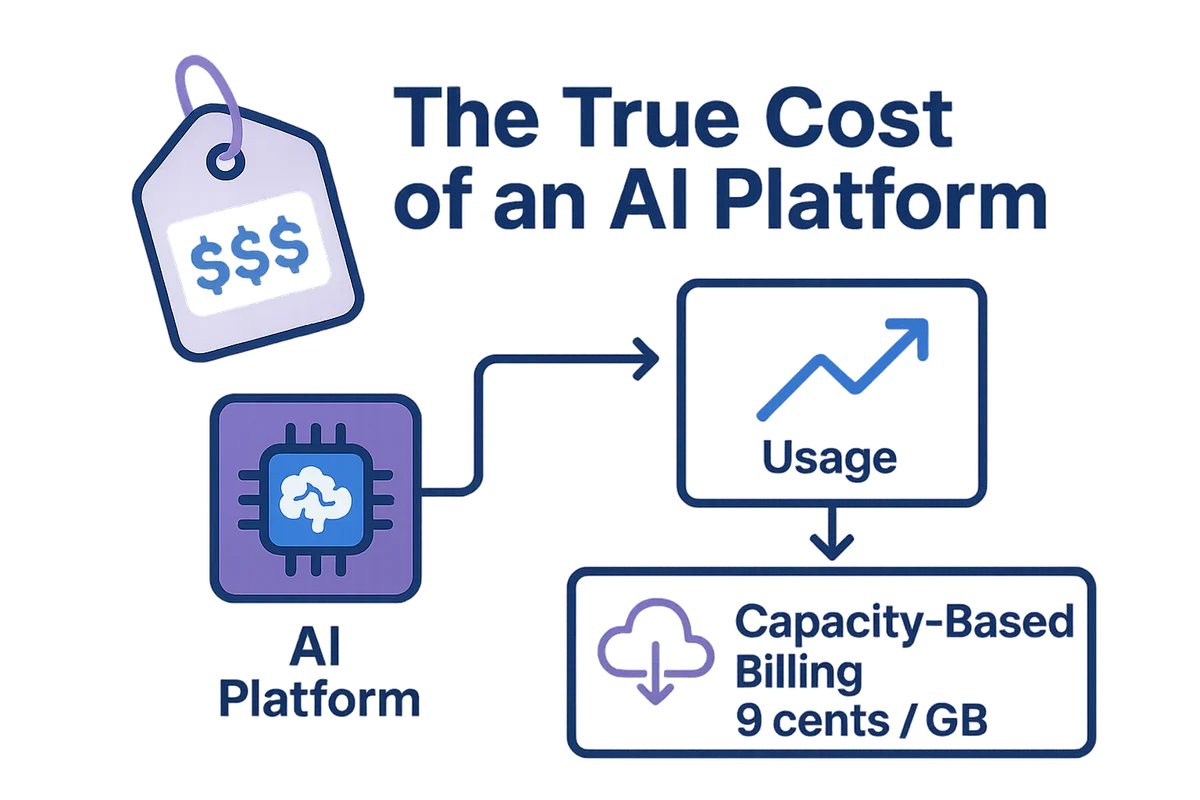

Sticker price alone rarely reflects what an AI platform will truly cost over its lifetime. Subscription tiers hint at expense, but the math shifts once real usage data rolls in and workloads spike. A model that looks affordable during a pilot can double or triple monthly outlay once teams push it into production with heavier API traffic.

Usage- and capacity-based charges often dominate the final bill and hide the biggest surprises. Data-egress fees from cloud hosts such as AWS often run about nine cents per gigabyte, so exporting inference logs for analytics can quietly add thousands each quarter. Fine-tuning a large language model burns GPU hours that vendors pass through at market rates; during last year’s GPU shortage, several buyers reported weekend surcharges of 25 percent. If the provider throttles baseline calls during peak periods, you’ll either pay surge pricing or watch applications slow to a crawl.
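A quick worked estimate shows how these line items add up. This sketch assumes a flat $0.09 per GB for egress (real cloud pricing is tiered by volume and region) and applies the 25 percent surcharge only to GPU hours billed in surcharged windows; the $2 hourly GPU rate in the example is a placeholder, not a quoted price.

```python
EGRESS_PER_GB = 0.09  # flat rate assumed from the text; real pricing is tiered
SURCHARGE = 0.25      # the 25 percent surcharge buyers reported


def quarterly_egress_cost(gb_per_month: float, rate: float = EGRESS_PER_GB) -> float:
    """Egress cost for three months of exports at a flat per-GB rate."""
    return round(gb_per_month * 3 * rate, 2)


def gpu_cost(hours: float, hourly_rate: float, surcharged_hours: float = 0.0) -> float:
    """Pass-through GPU cost, adding the surcharge to hours billed in peak windows.

    `surcharged_hours` is the subset of `hours` that falls in surcharged windows.
    """
    base = hours * hourly_rate
    extra = surcharged_hours * hourly_rate * SURCHARGE
    return round(base + extra, 2)


if __name__ == "__main__":
    # 5,000 GB of inference logs per month -> 15,000 GB per quarter.
    print(quarterly_egress_cost(5000))              # 1350.0
    # 100 fine-tuning hours at a placeholder $2/hour, 20 of them surcharged.
    print(gpu_cost(100, 2.0, surcharged_hours=20))  # 210.0
```

At these assumed volumes, log exports alone cost about $1,350 per quarter, which is the kind of line item that never appears on the subscription quote.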

Integration and upkeep can cost as much as the service itself and deserve equal attention. Building a secure sandbox, wiring the SDK into existing pipelines, and managing version upgrades usually consume 20 to 30 percent of the first-year budget, according to Gartner’s 2023 TCO survey. Ongoing DevOps support, mandatory penetration tests, and quarterly model revalidation belong in the budget too. Skip that planning, and Finance will face follow-on purchase orders every time engineering needs more credits.

Indirect costs, from security incidents to contractual penalties, can quickly dwarf the invoice. IBM’s Cost of a Data Breach Report 2023 pegs the average breach at 4.45 million dollars, and downtime penalties in SaaS contracts often start at one month of service credits per incident. Lock-in clauses raise another flag: if you can’t move your fine-tuned weights off-platform without a hefty exit fee, the switching bill two years later could exceed initial deployment spend.

Before you put pen to paper, run through this five-point gut check:

- Map predicted query volume against every pricing tier.

- Ask for a written cap on data-egress and surge fees.

- Model sandbox, DevOps, and audit expenses alongside license cost.

- Quantify breach, downtime, and exit-migration exposure.

- Align renewal terms with expected model retraining and scaling cycles.
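The first item on that list, mapping predicted query volume against every pricing tier, is simple arithmetic once the tiers are written down. The three tiers below are invented for illustration; substitute the vendor's actual fees, allowances, and overage rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    monthly_fee: float      # flat subscription price
    included_queries: int   # queries covered by the fee
    overage_per_1k: float   # price per 1,000 queries beyond the allowance


def monthly_cost(tier: Tier, queries: int) -> float:
    """Subscription fee plus overage for a predicted monthly query volume."""
    overage = max(0, queries - tier.included_queries)
    return tier.monthly_fee + overage / 1000 * tier.overage_per_1k


def cheapest_tier(tiers: list[Tier], queries: int) -> Tier:
    """Pick the tier with the lowest total monthly cost at this volume."""
    return min(tiers, key=lambda t: monthly_cost(t, queries))


# Invented tiers for illustration only.
TIERS = [
    Tier("Starter", 500.0, 100_000, 8.0),
    Tier("Growth", 2_000.0, 500_000, 5.0),
    Tier("Scale", 6_000.0, 2_000_000, 3.0),
]

if __name__ == "__main__":
    for volume in (80_000, 1_200_000, 3_000_000):
        best = cheapest_tier(TIERS, volume)
        print(f"{volume:>9,} queries/month -> {best.name}: ${monthly_cost(best, volume):,.0f}")
```

With these numbers, the cheapest tier shifts from Starter at a pilot-sized 80,000 queries a month to Growth at 1.2 million and Scale at 3 million, which is why a quote sized for the pilot says little about production cost.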

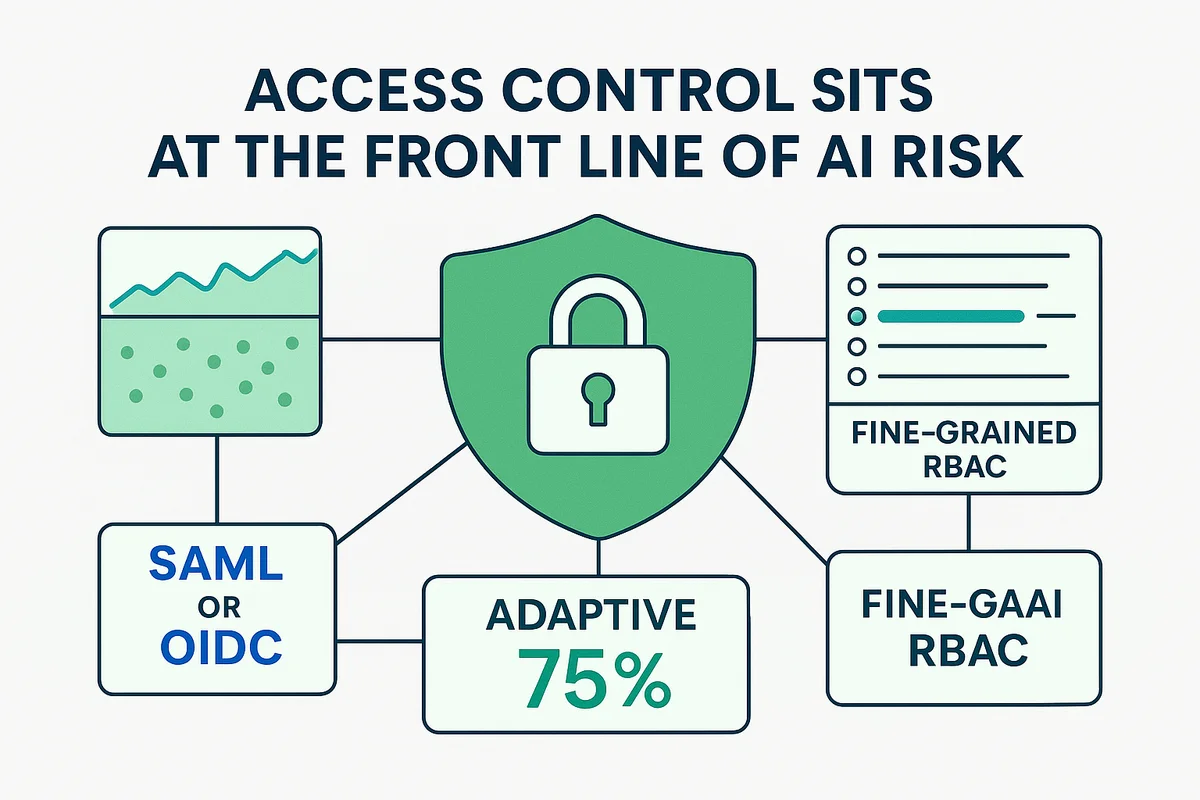

How granular is user and admin access control?

Access control sits at the front line of AI risk; get it wrong and every other safeguard crumbles. The vendor should plug straight into your identity provider so users face the same SSO flow and complete MFA each session. Anything less invites password reuse, shared tokens, and a threat hunter’s worst Monday.

Role-based access control keeps the data scientist out of finance tables and stops a contractor from altering production prompts. Strong tools offer just-in-time roles that appear only when needed and vanish on schedule, while automated off-boarding wipes stale accounts the hour a badge is returned. Ask for clear separation between training, staging, and production tenants; if they share a namespace, be concerned.

Look for these access features before any purchase order leaves your desk or inbox:

- SAML or OIDC for friction-free SSO, tested against Okta and Microsoft Entra ID (formerly Azure AD)

- Adaptive MFA that tightens controls on risky IP addresses

- Fine-grained RBAC down to individual API routes or model versions

- Policy-based data masking that hides PII unless the user holds an approved role

- Tenant isolation built with container or VPC boundaries, not mere table prefixes
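Route-level RBAC and just-in-time roles can be modeled in a few lines. In this sketch the role names, routes, and 30-minute grant are hypothetical; a real deployment would pull roles from your identity provider rather than an in-memory dictionary.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical mapping of roles to the (HTTP method, route) pairs they may call.
ROLE_PERMISSIONS = {
    "data_scientist": {("POST", "/v1/models/staging/infer")},
    "prompt_admin": {("PUT", "/v1/prompts/production")},
}


class AccessPolicy:
    """Standing roles plus just-in-time grants that expire automatically."""

    def __init__(self) -> None:
        self.standing: dict[str, set[str]] = {}
        self.jit: dict[tuple[str, str], datetime] = {}  # (user, role) -> expiry

    def grant_jit(self, user: str, role: str, minutes: int, now: datetime) -> None:
        """Give `user` a temporary role that lapses after `minutes`."""
        self.jit[(user, role)] = now + timedelta(minutes=minutes)

    def active_roles(self, user: str, now: datetime) -> set[str]:
        """Standing roles plus any JIT grants that have not yet expired."""
        roles = set(self.standing.get(user, set()))
        roles |= {r for (u, r), expiry in self.jit.items() if u == user and expiry > now}
        return roles

    def allowed(self, user: str, method: str, route: str, now: datetime) -> bool:
        """True if any active role permits this exact method and route."""
        return any((method, route) in ROLE_PERMISSIONS.get(role, set())
                   for role in self.active_roles(user, now))


if __name__ == "__main__":
    now = datetime(2026, 1, 5, 9, 0, tzinfo=timezone.utc)
    policy = AccessPolicy()
    policy.standing["ana"] = {"data_scientist"}
    route = "/v1/prompts/production"
    print(policy.allowed("ana", "PUT", route, now))                          # False
    policy.grant_jit("ana", "prompt_admin", minutes=30, now=now)
    print(policy.allowed("ana", "PUT", route, now + timedelta(minutes=10)))  # True
    print(policy.allowed("ana", "PUT", route, now + timedelta(minutes=31)))  # False
```

Expiry is checked at request time, so a lapsed grant stops working even if no cleanup job has run yet.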

Logs carry as much weight in security as the locks on the front door. The platform should export immutable events to your SIEM, whether that’s Splunk or Datadog, within five minutes of creation so you can spot anomalous queries in near real time. A solid vendor lets customers hold their own KMS keys and can require a second administrator to approve any decrypt request, leaving an audit trail by default.
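To verify the five-minute export target during a pilot, compare each event's creation time with the time your SIEM received it. The field names `created_at` and `received_at` are assumptions; map them to whatever your vendor and SIEM actually emit.

```python
from datetime import datetime, timedelta

MAX_EXPORT_LAG = timedelta(minutes=5)  # the five-minute target from the text


def late_events(events: list[dict]) -> list[str]:
    """Return IDs of events that reached the SIEM later than MAX_EXPORT_LAG.

    Each event is an assumed dict with 'id', 'created_at', and 'received_at'
    ISO 8601 timestamps that include a UTC offset.
    """
    late = []
    for event in events:
        created = datetime.fromisoformat(event["created_at"])
        received = datetime.fromisoformat(event["received_at"])
        if received - created > MAX_EXPORT_LAG:
            late.append(event["id"])
    return late


if __name__ == "__main__":
    sample = [
        {"id": "evt-1", "created_at": "2026-01-05T10:00:00+00:00",
         "received_at": "2026-01-05T10:02:00+00:00"},
        {"id": "evt-2", "created_at": "2026-01-05T10:00:00+00:00",
         "received_at": "2026-01-05T10:07:30+00:00"},
    ]
    print(late_events(sample))  # ['evt-2']
```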

Schedule access reviews alongside routine maintenance and bake them into the runbook. Quarterly certifications catch privilege creep long before an external audit, while a simple CSV of current roles proves diligence during contract renewals. Combine those attestations with the log feed and you have evidence to satisfy regulators, insurers, and the board without extra spreadsheet gymnastics.
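A quarterly certification can start from a generated CSV of current roles, with stale accounts flagged for review. This sketch assumes a simple account record and a 90-day inactivity threshold; both are placeholders for your own policy.

```python
import csv
import io
from datetime import date, timedelta

STALE_AFTER = timedelta(days=90)  # assumed inactivity threshold


def access_review(accounts: list[dict], today: date) -> tuple[str, list[str]]:
    """Return a CSV of current roles and the users inactive longer than STALE_AFTER.

    Each account is an assumed dict with 'user', 'roles' (a set), and 'last_login' (a date).
    """
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["user", "roles", "last_login"])
    stale = []
    for acct in sorted(accounts, key=lambda a: a["user"]):
        writer.writerow([acct["user"], ";".join(sorted(acct["roles"])),
                         acct["last_login"].isoformat()])
        if today - acct["last_login"] > STALE_AFTER:
            stale.append(acct["user"])
    return buf.getvalue(), stale


if __name__ == "__main__":
    report, stale = access_review(
        [
            {"user": "bo", "roles": {"viewer"}, "last_login": date(2025, 9, 1)},
            {"user": "ana", "roles": {"admin", "viewer"}, "last_login": date(2025, 12, 20)},
        ],
        today=date(2026, 1, 5),
    )
    print(report)
    print("Stale accounts:", stale)  # Stale accounts: ['bo']
```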

Conclusion

Choosing an AI platform based only on buzz can expose your team to costly surprises. Focus on five checkpoints: data handling, security controls, compliance, true cost, and access management. Together these pillars reveal every data path, test every safeguard, tally every expense, and clarify who can enter the system before you sign.

Ask these five questions early, update the answers as conditions change, and the AI you deploy will stay an asset rather than a liability. A careful review today is still the surest way to preserve tomorrow’s value and minimize risk.

Audit your company’s SaaS usage today

If you’re interested in learning more about SaaS Management, let us know. Torii’s SaaS Management Platform can help you:

- Find hidden apps: Use AI to scan your entire company for unauthorized apps. Scanning happens in real time and runs continuously in the background.

- Cut costs: Save money by removing unused licenses and duplicate tools.

- Implement IT automation: Automate IT tasks, such as onboarding and offboarding, to save time and reduce errors.

- Get contract renewal alerts: Ensure you don’t miss important contract renewals.

Torii is the industry’s first all-in-one SaaS Management Platform, providing a single source of truth across Finance, IT, and Security.

Learn more by visiting Torii.

Frequently Asked Questions

The AI tool should provide a detailed data flow diagram and evidence of encryption, retention policies, and privacy certifications, ensuring sensitive data is managed securely throughout its lifecycle.

Security controls should include TLS encryption for data in transit, regular vulnerability scans, and separation between development and production environments to mitigate risks effectively.

Verify that the vendor complies with frameworks like GDPR and HIPAA, and has necessary audit rights and incident notification obligations laid out in the Data Processing Agreement.

Consider not just the subscription fees but also potential data egress, integration, and maintenance costs. Look for transparency in long-term pricing to avoid unexpected expenses.

Access control should include role-based mechanisms, multi-factor authentication, and logging capabilities to ensure robust security measures are in place for sensitive data protection.

A reliable AI vendor should supply documents like data flow diagrams, encryption details, and compliance certifications to demonstrate their capability in managing data safely.

Organizations should create a checklist of critical questions focusing on data handling, security, compliance, cost, and access management to evaluate vendors effectively.